Introduction

Assessment is an essential component of teaching and learning, and it provides the instructor with immediate feedback on the quality of instruction. Instructors continuously evaluate a learner’s performance in order to provide guidance, suggestions for improvement, and positive reinforcement.

This chapter examines the instructor’s role when assessing levels of learning, describes methods of assessment, and discusses how instructors may construct and conduct effective assessments. Since learners are different and each situation is unique, instructors individualize an assessment as needed. Aviation instructors can use the techniques and methods described in this chapter as appropriate.

Assessment Terminology

This chapter presents two broad categories of assessment. The first is traditional assessment, which often involves the kind of written testing (e.g., multiple choice, matching) and grading that is most familiar to instructors and learners. To achieve a passing score on a traditional assessment, the learner usually has a set amount of time to recognize or reproduce memorized terms, formulas, or data. There is a single answer that is correct. Consequently, the traditional assessment usually assesses the learner’s progress at the rote and understanding levels of learning. However, carefully crafted scenario questions can assess higher levels of learning.

The second category of assessment is authentic assessment. Authentic assessment requires the learner to demonstrate not just rote and understanding, but also the application and correlation levels of learning. Authentic assessment generally requires the learner to perform real-world tasks and demonstrate a meaningful application of skills and competencies. In other words, the authentic assessment requires the learner to exhibit in-depth knowledge by generating a solution instead of merely choosing a response.

In authentic assessment, there are specific performance criteria, or standards, that learners know in advance of the actual assessment.

The terms “criteria/criterion” and “standard” are often used interchangeably. They refer to the characteristics that define acceptable performance on a task. Another term used in association with authentic assessment is “rubric.” A rubric is a guide used to score performance assessments in a reliable, fair, and valid manner. It is generally composed of dimensions for judging learner performance, a scale for rating performances on each dimension, and standards of excellence for specified performance levels.

Whether knowledge or skill, an assessment can be either formal or informal. Formal assessments usually involve documentation, such as a quiz or written examination. They are used periodically throughout a course, as well as at the end of a course, to measure and document whether or not the course objectives have been met. Informal assessments, which can include verbal critique, generally occur as needed and are not part of the final grade.

Other terms associated with assessment include diagnostic, formative, and summative.

⦁ Diagnostic assessments assess learner knowledge or skills prior to a course of instruction.

⦁ Formative assessments, which are not graded, provide a wrap-up of the lesson and set the stage for the next lesson. This type of assessment, limited to what transpired during that lesson, informs the instructor what to reinforce.

⦁ Summative assessments, used periodically throughout the training, measure how well learning has progressed to that point. For example, a stage-check, a chapter quiz, or an end-of-course test can measure the learner’s overall mastery of the training. These assessments are an integral part of the lesson, as well as the course of training.

Purpose of Assessment

An effective assessment provides critical information to both the instructor and the learner. Both instructor and learner need to know how well the learner is progressing. A good assessment provides practical and specific feedback to learners. This includes direction and guidance indicating how they may raise their level of performance. Most importantly, a well-designed and effective assessment provides an opportunity for self-evaluation that enhances the learner’s aeronautical decision-making and judgment skills.

A well-designed assessment highlights the areas in which a learner’s performance is incorrect or inadequate, and it helps the instructor see where more emphasis is needed. If, for example, several learners falter when they reach the same step in a weight-and-balance problem, the instructor should recognize the need for a more detailed explanation, another demonstration of the step, or special emphasis in the assessment of subsequent performance.

General Characteristics of Effective Assessment

In order to provide direction and raise the learner’s level of performance, assessment needs to be factual, and it should align with the completion standards of the lesson. An effective assessment displays the characteristics shown in Figure 6-1.

Figure 6-1. Effective assessments share a number of characteristics.

Objective

The personal opinions, likes, dislikes, or biases of the instructor might affect an assessment. A conflict of personalities can alter an opinion. Sympathy or over-identification with a learner, to such a degree that it influences objectivity, is known as “halo error.” To what extent does effective assessment need to focus on objectivity and actual learner performance? If an assessment is to be effective, it needs to be honest, and it must be based on the facts of the performance as they were, not as they could have been.

Flexible

The instructor should evaluate the entire performance of a learner in the context in which it is accomplished. Sometimes a good learner turns in a poor performance, and a poor learner turns in a good one. A friendly learner may suddenly become hostile, or a hostile learner may suddenly become friendly and cooperative. The instructor fits the tone, technique, and content of the assessment to the occasion, as well as to the learner. An assessment should be designed and executed so that the instructor can allow for variables. The ongoing challenge for the instructor is deciding what to say, what to omit, what to stress, and what to minimize at the proper moment.

Acceptable

Consider that learners do not like negative feedback. What makes an honest assessment acceptable to the learner? A certificate or credential alone rarely suffices. Learners need to have confidence in the instructor’s qualifications, teaching ability, sincerity, competence, and authority. Usually, instructors have the opportunity to establish themselves with learners before the formal assessment arises. If not, however, the instructor’s manner, attitude, and familiarity with the subject at hand serve this purpose.

Assessments presented fairly, with authority, conviction, sincerity, and from a position of recognizable competence tend to work well.

Comprehensive

A comprehensive assessment is not necessarily a long one, nor need it treat every aspect of the performance in detail. While it includes strengths as well as weaknesses, the degree of coverage of each should fit the situation. The instructor might report what most needs improvement, or only what the learner can reasonably be expected to improve. The instructor decides whether the greater benefit comes from a discussion of a few major points or a number of minor points.

Constructive

An assessment is pointless unless the learner benefits from it. Praise can capitalize on things that are done well and inspire the learner to improve in areas of lesser accomplishment. When identifying a mistake or weakness, the instructor needs to give positive guidance for correction. Praise for its own sake or negative comments that do not point toward improvement or a higher level of performance should be omitted from an assessment altogether.

Organized

An assessment must be organized. Almost any pattern is acceptable, as long as it is logical and makes sense to the learner. An effective organizational pattern might be the sequence of the performance itself. Sometimes an assessment can begin at the point at which a demonstration failed, and work backward through the steps that led to the failure. A success can be analyzed in similar fashion. Alternatively, a glaring deficiency can serve as the core of an assessment. Breaking the whole into parts, or building the parts into a whole, is another possible organizational approach.

Thoughtful

An effective assessment reflects the instructor’s thoughtfulness toward the learner’s need for self-esteem, recognition, and approval. The instructor refrains from minimizing the inherent dignity and importance of the individual. Ridicule, anger, or fun at the expense of the learner has no place in assessment. While being straightforward and honest, the instructor should always respect the learner’s personal feelings. For example, the instructor should try to deliver criticism in private.

Specific

The instructor’s comments and recommendations should be specific. Learners cannot act on recommendations unless they know specifically what the recommendations are. A statement such as, “Your second weld wasn’t as good as your first,” has little constructive value. Instead, the instructor should say why it was not as good and offer suggestions on how to improve the weld. If the instructor has a clear, well-founded, and supportable idea in mind, it should be expressed with firmness and authority, and in terms that cannot be misunderstood. At the conclusion of an assessment, learners should have no doubt about what they did well and what they did poorly and, most importantly, specifically how they can improve.

Traditional Assessment

As defined earlier, traditional assessment generally refers to written testing, such as multiple choice, matching, true/false, fill in the blank, etc. Learners typically complete written assessments within a specified time. There is a single, correct response for each item. The assessment, or test, assumes that all learners should learn the same thing, and relies on rote memorization of facts. Responses are often machine scored and offer little opportunity for a demonstration of the thought processes characteristic of critical thinking skills.

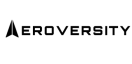

Traditional assessment lends itself to instructor-centered teaching styles. The instructor teaches the material at a low level, and the measure of performance is limited. In traditional assessment, fairly simple grading matrices such as the one shown in Figure 6-2 are used. Due to this approach, a satisfactory grade for one lesson may not reflect a learner’s ability to apply knowledge in a different situation.

Still, tests of this nature do have a place in the assessment hierarchy. Multiple choice, supply type, and other such tests are useful in assessing the learner’s grasp of information, concepts, terms, processes, and rules—factual knowledge that forms the foundation needed for the learner to advance to higher levels of learning.

Characteristics of a Good Written Assessment (Test)

Whether an instructor designs his or her own tests or uses commercially available test banks, it is important to know the components of an effective test. (Note: This section is intended to introduce basic concepts of written-test design. Please see Appendix A for testing and test-writing publications.)

Figure 6-2. Traditional grading.

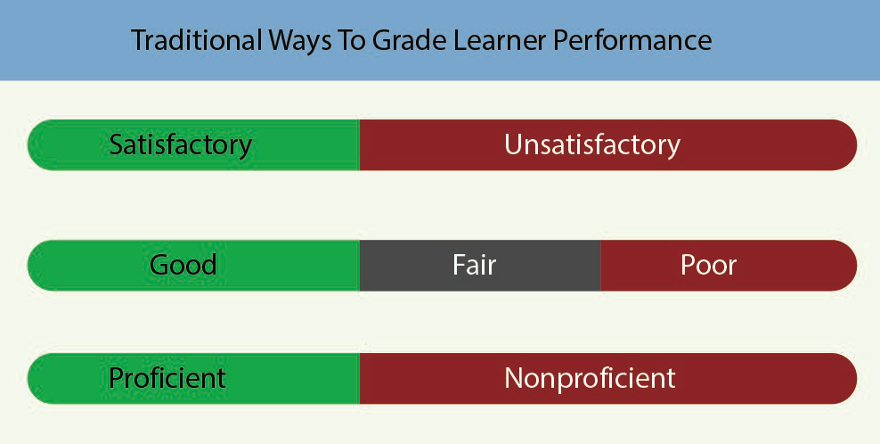

A test is a set of questions, problems, or exercises intended to determine whether the learner possesses a particular knowledge or skill. A test can consist of just one test item, but it usually consists of a number of test items. A test item measures a single objective and calls for a single response. The test could be as simple as the correct answer to an essay question or as complex as completing a knowledge or practical test. Regardless of the underlying purpose, effective tests share certain characteristics. [Figure 6-3]

Reliability is the degree to which test results are consistent with repeated measurements. If identical measurements are obtained every time a certain instrument is applied to a certain dimension, the instrument is considered reliable. The reliability of a written test is judged by whether it gives consistent measurement to a particular individual or group. Keep in mind, though, that knowledge, skills, and understanding can improve with subsequent attempts at taking the same test, because the first test serves as a learning device.
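As a rough illustration of "consistent measurement," the sketch below correlates the scores a group earns on two administrations of the same test. The source does not prescribe this calculation; the Pearson correlation is one common way to estimate test-retest consistency, and the function name and the scores are hypothetical.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equal-length lists of scores."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    n = len(first)
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    # Sum of cross-products and sums of squares of the deviations.
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)
    if var_a == 0 or var_b == 0:
        raise ValueError("scores must vary to compute a correlation")
    return cov / sqrt(var_a * var_b)


# Hypothetical scores for the same five learners on two administrations.
first_attempt = [70, 82, 90, 65, 78]
second_attempt = [74, 85, 93, 70, 80]

r = pearson_r(first_attempt, second_attempt)
print(f"test-retest correlation: {r:.2f}")
```

In this made-up data every learner scores a little higher the second time, which matches the caution above that the first test serves as a learning device. The correlation stays high because the learners keep roughly the same relative standing, so a high value here indicates consistent ranking, not identical scores.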

Validity is the extent to which a test measures what it is supposed to measure, and it is the most important consideration in test evaluation. The instructor must carefully consider whether the test actually measures what it is supposed to measure. To estimate validity, several instructors read the test critically and consider its content relative to the stated objectives of the instruction. Items that do not pertain directly to the objectives of the course should be modified or eliminated.

Usability refers to the functionality of tests. A usable written test is easy to give if it is printed in a type size large enough for learners to read easily. The wording of both the directions for taking the test and of the test items needs to be clear and concise. Graphics, charts, and illustrations appropriate to the test items must be clearly drawn, and the test should be easily graded.

Objectivity describes singleness of scoring of a test. Essay questions provide an example of this principle. It is nearly impossible to prevent an instructor’s own knowledge and experience in the subject area, writing style, or grammar from affecting the grade awarded. Selection-type test items, such as true/false or multiple choice, are much easier to grade objectively.

Comprehensiveness is the degree to which a test measures the overall objectives. Suppose, for example, an AMT wants to measure the compression of an aircraft engine. Measuring compression on a single cylinder would not provide an indication of the entire engine. Similarly, a written test must sample an appropriate cross-section of the objectives of instruction. The instructor makes certain the evaluation includes a representative and comprehensive sampling of the objectives of the course.

Discrimination is the degree to which a test distinguishes the difference between learners and may be appropriate for assessment of academic achievement. However, minimum standards are far more important in assessments leading to pilot certification. If necessary for classroom evaluation of academic achievement, a test must measure small differences in achievement in relation to the objectives of the course. A test designed for discrimination contains:

- A wide range of scores

- All levels of difficulty

- Items that distinguish between learners with differing levels of achievement of the course objectives
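One way to check whether an item distinguishes between learners is the upper-lower discrimination index from classical item analysis: the proportion of high scorers who answer the item correctly minus the proportion of low scorers who do. The source does not name this technique, so treat the sketch below as an assumption. The function name, the 27 percent group size (a conventional choice), and the sample results are all hypothetical.

```python
def discrimination_index(results, item, fraction=0.27):
    """Upper-lower discrimination index for one test item.

    results: one dict per learner, mapping item name -> 1 (correct) or 0.
    fraction: share of learners placed in each of the upper and lower groups.
    """
    # Rank learners by total score, highest first (ties keep input order).
    ranked = sorted(results, key=lambda r: sum(r.values()), reverse=True)
    k = max(1, round(len(ranked) * fraction))
    upper, lower = ranked[:k], ranked[-k:]
    p_upper = sum(r[item] for r in upper) / k
    p_lower = sum(r[item] for r in lower) / k
    return p_upper - p_lower


# Hypothetical results for six learners on a three-item quiz.
results = [
    {"q1": 1, "q2": 1, "q3": 1},
    {"q1": 1, "q2": 1, "q3": 0},
    {"q1": 1, "q2": 1, "q3": 0},
    {"q1": 1, "q2": 0, "q3": 0},
    {"q1": 1, "q2": 0, "q3": 0},
    {"q1": 1, "q2": 0, "q3": 0},
]

for item in ("q1", "q2", "q3"):
    print(item, discrimination_index(results, item))
```

An item that every learner answers correctly, such as q1 in this sample, has an index of zero. That is not a flaw when the goal is to confirm a minimum standard, which the text above identifies as the more important concern in assessments leading to pilot certification.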

Please see Appendix B for information on the advantages and disadvantages of multiple choice, supply type, and other written assessment instruments, as well as guidance on creating effective test items.

Authentic Assessment

Authentic assessment asks the learner to perform real-world tasks and demonstrate a meaningful application of skills and competencies. Authentic assessment lies at the heart of training today’s aviation learner to use critical thinking skills. Rather than selecting from predetermined responses, learners must generate responses from skills and concepts they have learned. By using open-ended questions and established performance criteria, authentic assessment focuses on the learning process, enhances the development of real-world skills, encourages higher-order thinking skills, and teaches learners to assess their own work and performance.

Learner-Centered Assessment

There are several aspects of effective authentic assessment. The first is the use of open-ended questions in what might be called a “collaborative critique,” which is a form of learner-centered grading. As described in the scenario that introduced this chapter, the instructor begins by using a four-step series of open-ended questions to guide the learner through a complete self-assessment.

Replay—the instructor asks the learner to verbally replay the flight or procedure. While the learner speaks, the instructor listens for areas where the account does not seem accurate. At the right moment, the instructor discusses any discrepancy with the learner. This approach gives the learner a chance to validate his or her own perceptions, and it gives the instructor critical insight into the learner’s judgment abilities.

Reconstruct—the reconstruction stage encourages learning by identifying the key things that the learner would have, could have, or should have done differently during the flight or procedure.

Reflect—insights come from investing perceptions and experiences with meaning, requiring reflection on the events. For example:

- What was the most important thing you learned today?

- What part of the session was easiest for you? What part was hardest?

- Did anything make you uncomfortable? If so, when did it occur?

- How would you assess your performance and your decisions?

- How did your performance compare to the standards in the ACS?

Redirect—the final step is to help the learner relate lessons learned in this session to other experiences and consider how they might help in future sessions. Questions might include:

⦁ How does this experience relate to previous lessons?

⦁ What might be done to mitigate a similar risk in a future situation?

⦁ Which aspects of this experience might apply to future situations, and how?

⦁ What personal minimums should be established, and what additional proficiency flying and/or training might be useful?

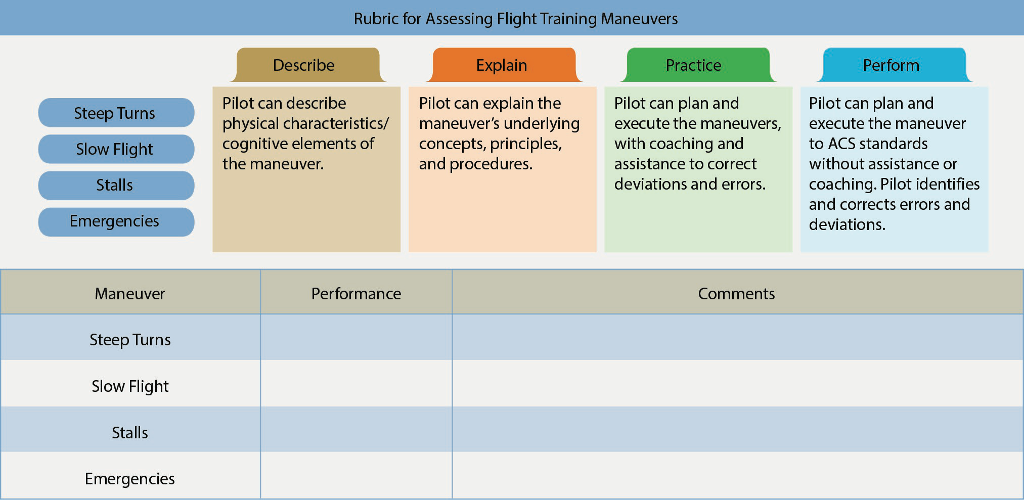

Any self-assessment stimulates growth in the learner’s thought processes and, in turn, behaviors. An in-depth discussion between the instructor and the learner may follow, which compares the instructor’s assessment to the learner’s self-assessment. Through this discussion, the instructor and the learner jointly determine the learner’s progress. The progress may be recorded on a rubric as part of a training program. As explained earlier, a rubric is a guide for scoring performance assessments in a reliable, fair, and valid manner. It is generally composed of dimensions for judging learner performance, a scale for rating performances on each dimension, and standards of excellence for specified performance levels.

The collaborative assessment process in learner-centered grading uses two broad rubrics: one that assesses the learner’s level of proficiency on skill-focused maneuvers or procedures, and one that assesses the learner’s level of proficiency on single-pilot resource management (SRM), which is the cognitive or decision-making aspect of flight training.

The performance assessment dimensions for each type of rubric are as follows:

Maneuver or Procedure “Grades”

⦁ Describe—at the completion of the scenario, the learner is able to describe the physical characteristics and cognitive elements of the scenario activities but needs assistance to execute the maneuver or procedure successfully.

⦁ Explain—at the completion of the scenario, the learner is able to describe the scenario activity and understand the underlying concepts, principles, and procedures that comprise the activity, but needs assistance to execute the maneuver or procedure successfully.

⦁ Practice—at the completion of the scenario, the learner is able to plan and execute the scenario. Coaching, instruction, and/or assistance will correct deviations and errors identified by the instructor.

⦁ Perform—at the completion of the scenario, the learner is able to perform the activity without instructor assistance. The learner will identify and correct errors and deviations in an expeditious manner. At no time will the successful completion of the activity be in doubt. (“Perform” is used to signify that the learner is satisfactorily demonstrating proficiency in traditional piloting and systems operation skills).

⦁ Not observed—any event not accomplished or required.

For example, a learner can describe a landing and can tell the flight instructor about the physical characteristics and appearance of the landing. On a good day, with the wind straight down the runway, the learner may be able to practice landings with some success while still functioning at the rote level of learning. However, on a gusty crosswind day the learner needs a deeper level of understanding to adapt to the different conditions. If a learner can explain all the basic physics associated with lift/drag and crosswind correction, he or she is more likely to practice successfully and eventually perform a landing under a wide variety of conditions.

Assessing Risk Management Skills

⦁ Explain—the learner can verbally identify, describe, and understand the risks inherent in the flight scenario, but needs to be prompted to identify risks and make decisions.

⦁ Practice—the learner is able to identify, understand, and apply SRM principles to the actual flight situation. Coaching, instruction, and/or assistance quickly corrects minor deviations and errors identified by the instructor. The learner is an active decision maker.

⦁ Manage-Decide—the learner can correctly gather the most important data available both inside and outside the flight deck, identify possible courses of action, evaluate the risk inherent in each course of action, and make the appropriate decision. Instructor intervention is not required for the safe completion of the flight.

In SRM, the learner may be able to describe basic SRM principles during the first flight. Later, he or she is able to explain how SRM applies to different scenarios that are presented on the ground and in the air. When the learner actually begins to make quality decisions based on good SRM techniques, he or she earns a grade of manage-decide. The advantage of this type of grading is that both flight instructor and learner know exactly where the learning has progressed.

Let’s look at how the rubric in Figure 6-4 might be used in a flight training scenario. During the postflight debriefing, flight instructor Linda asks her learner, Brian, to assess his performance for the day using the Replay, Reconstruct, Reflect, and Redirect guided discussion questions described in the Learner-Centered Assessment section presented earlier in this chapter. Based on this assessment, she and Brian discuss where Brian’s performance falls in the rubrics for maneuvers/procedures and SRM. This part of the assessment may be verbally discussed or, alternatively, Brian and Linda separately create an assessment sheet for each element of the flight.

Figure 6-4. Rubric for assessing flight training maneuvers.

When Brian studies the performance levels, he decides he was at the “Perform” level since he had not made any mistakes. Where he had rated the item as “Perform,” Linda had rated it as “Practice.” During the ensuing discussion, Brian understands where he needs more practice before his performance is at the “Perform” level.
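The comparison of the two assessment sheets can be sketched in a few lines of code. This is only an illustration: the numeric ordering of the grades, the task names, and the function name are assumptions, and a task that was not observed is simply left off the sheet.

```python
from enum import IntEnum


class ManeuverGrade(IntEnum):
    """Maneuver or procedure grades, ordered from least to most proficient."""
    DESCRIBE = 1
    EXPLAIN = 2
    PRACTICE = 3
    PERFORM = 4


def compare_assessments(learner, instructor):
    """Return the tasks on which the two assessment sheets disagree.

    Each argument maps a task name to a ManeuverGrade. Tasks missing from
    the instructor's sheet (not observed) are skipped.
    """
    gaps = {}
    for task, self_grade in learner.items():
        instructor_grade = instructor.get(task)
        if instructor_grade is not None and instructor_grade != self_grade:
            gaps[task] = (self_grade, instructor_grade)
    return gaps


# Hypothetical assessment sheets for one flight.
brian = {
    "crosswind landing": ManeuverGrade.PERFORM,
    "steep turns": ManeuverGrade.PRACTICE,
}
linda = {
    "crosswind landing": ManeuverGrade.PRACTICE,
    "steep turns": ManeuverGrade.PRACTICE,
}

for task, (self_grade, instructor_grade) in compare_assessments(brian, linda).items():
    print(f"Discuss {task}: learner {self_grade.name}, instructor {instructor_grade.name}")
```

The output is a list of discussion points, not a verdict. The value of the method lies in the conversation that follows each disagreement.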

This approach to assessment has several key advantages. One is that it actively involves the learner in the assessment process and establishes the habit of healthy reflection and self-assessment that is critical to being a safe pilot. Another is that these grades are not self-esteem related, since they do not describe a recognized level of prestige (such as A+ or “Outstanding”), but rather a level of performance. The learner cannot flunk a lesson. Instead, he or she demonstrates a particular level of flight and SRM skills.

Both instructors and learners may initially be reluctant to use this method of assessment. Instructors may think it requires more time, when in fact it is merely a more structured, effective, and collaborative version of a traditional postflight critique. Also, instructors who learned in the more traditional assessment structure must be careful not to equate or force the dimensions of the rubric into the traditional grading mold of A through F. One way to avoid this temptation is to remember that evaluation should be progressive: the learner may achieve a new level of learning during each lesson. For example, in flight one, a task might be a “describe” item. By flight three, it is a “practice” item, and by flight five, it is a “manage-decide” item.

The learner may be reluctant to self-assess if he or she has not had the chance to participate in such a process before. Therefore, the instructor may need to teach the learner how to become an active participant in the collaborative assessment.

Choosing an Effective Assessment Method

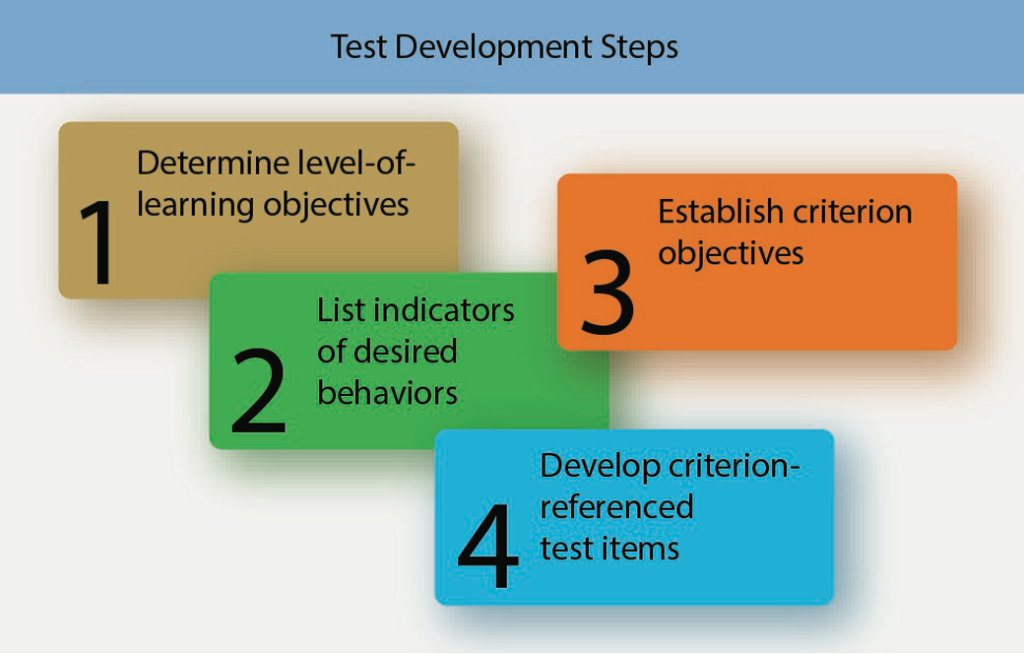

When deciding how to assess learner progress, aviation instructors can follow a four-step process.

- Determine level-of-learning objectives.

- List indicators of desired behaviors.

- Establish criterion objectives.

- Develop criterion-referenced test items.

This process is useful for tests that apply to the cognitive and affective domains of learning and also can be used for skill testing in the psychomotor domain. The development process for criterion-referenced tests follows a general-to-specific pattern. [Figure 6-5]

Figure 6-5. The development process for criterion-referenced tests follows a general-to-specific pattern.

Instructors should be aware that authentic assessment may not be as useful as traditional assessment in the early phases of training, because the learner does not have enough information about the concepts or knowledge to participate fully. As discussed in Chapter 3, The Learning Process, when exposed to a new topic, learners first tend to acquire and memorize facts. As learning progresses, they begin to organize their knowledge to formulate an understanding of the things they have memorized. When learners possess the knowledge needed to analyze, synthesize, and evaluate (i.e., application and correlation levels of learning), they can participate more fully in the assessment process.

Determine Level-of-Learning Objectives

The first step in developing an appropriate assessment is to state the individual objectives as general, level-of-learning objectives. The objectives should measure one of the learning levels of the cognitive, affective, or psychomotor domains described in Chapter 3. The levels of cognitive learning include knowledge, comprehension, application, analysis, synthesis, and evaluation.

For the understanding level, an objective could be stated as, “Describe how to perform a compression test on an aircraft reciprocating engine.” This objective requires a learner to explain how to do a compression test, but not necessarily perform a compression test (application level). Further, the learner would not be expected to compare the results of compression tests on different engines (application level), design a compression test for a different type of engine (correlation level), or interpret the results of the compression test (correlation level). A general level-of-learning objective is a good starting point for developing a test because it defines the scope of the learning task.

List Indicators/Samples of Desired Behaviors

The second step is to list the indicators or samples of behavior that give the best indication of the achievement of the objective. The instructor selects behaviors that can be measured and which give the best evidence of learning. For example, if the instructor expects the learner to display the understanding level of learning on compression testing, some of the specific test question answers should describe appropriate tools and equipment, the proper equipment setup, appropriate safety procedures, and the steps used to obtain compression readings. The overall test must be comprehensive enough to give a true representation of the learning to be measured. It is not usually feasible to measure every aspect of a level-of-learning objective, but by carefully choosing samples of behavior, the instructor can obtain adequate evidence of learning.

Establish Criterion Objectives

The next step in the test development process is to define criterion (performance-based) objectives. In addition to the behavior expected, criterion objectives state the conditions under which the behavior is to be performed, and the criteria that must be met. If the instructor developed performance-based objectives during the creation of lesson plans, criterion objectives have already been formulated. The criterion objective provides the framework for developing the test items used to measure the level-of-learning objectives. In the compression test example, a criterion objective to measure the understanding level of learning might be stated as,

“The learner will demonstrate understanding of compression test procedures for reciprocating aircraft engines by completing a quiz with a minimum passing score of 70 percent.”

Develop Criterion-Referenced Assessment Items

The last step is to develop criterion-referenced assessment items. The development of written test questions is covered in the reference section. While developing written test questions, the instructor should attempt to measure the behaviors described in the criterion objective(s). The questions in the exam for the compression test example should cover all of the areas necessary to give evidence of understanding the procedure. The results of the test (questions missed) identify areas that were not adequately covered.
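To make the compression test example concrete, the sketch below scores a short quiz against the 70 percent criterion and tallies the missed questions by subject area, so the instructor can see what was not adequately covered. The question identifiers, answer key, and function name are hypothetical; the area labels follow the sample behaviors listed earlier.

```python
from collections import Counter

PASSING_SCORE = 70.0  # percent, taken from the example criterion objective


def score_quiz(answers, key, areas):
    """Score a quiz and tally missed questions by subject area.

    answers: question id -> choice selected by the learner.
    key:     question id -> correct choice.
    areas:   question id -> subject area the question samples.
    Returns (percent correct, passed?, Counter of missed areas).
    """
    if not key:
        raise ValueError("answer key is empty")
    # An unanswered question counts as missed.
    missed = [q for q, correct in key.items() if answers.get(q) != correct]
    percent = 100.0 * (len(key) - len(missed)) / len(key)
    missed_by_area = Counter(areas[q] for q in missed)
    return percent, percent >= PASSING_SCORE, missed_by_area


# Hypothetical five-question quiz on compression test procedures.
key = {"q1": "a", "q2": "c", "q3": "b", "q4": "d", "q5": "a"}
areas = {
    "q1": "tools and equipment",
    "q2": "equipment setup",
    "q3": "safety procedures",
    "q4": "safety procedures",
    "q5": "steps to obtain readings",
}
answers = {"q1": "a", "q2": "c", "q3": "a", "q4": "d", "q5": "a"}

percent, passed, missed_by_area = score_quiz(answers, key, areas)
print(f"{percent:.0f}% correct, passed: {passed}")
for area, count in missed_by_area.items():
    print(f"Review {area}: {count} question(s) missed")
```

A passing score and a list of missed areas answer different questions. The first shows whether the criterion was met. The second shows where more emphasis is needed even when it was.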

Performance-based objectives serve as a reference for the development of test items. If the test is the pre-solo knowledge test, the objectives are for the learner to understand the regulations, the local area, the aircraft type, and the procedures to be used. The test should measure the learner’s knowledge in these specific areas. Individual instructors should develop their own tests to measure the progress of their learners. If the test is to measure the readiness of a learner to take a knowledge test, it should be based on the objectives of all the lessons the learner has received.

Aviation training also involves performance tests for maneuvers or procedures. The flight instructor does not administer the practical test for a pilot certificate, nor does the aviation maintenance instructor administer the oral and practical exam for certification as an aviation maintenance technician (AMT). However, aviation instructors do get involved with the same skill or performance testing that is measured in these tests. Performance testing is desirable for evaluating training that involves an operation, a procedure, or a process. The job of the instructor is to prepare the learner to take these tests. Therefore, each element of the practical test should be evaluated prior to sending an applicant for the practical exam.

Practical tests for maintenance technicians and pilots are criterion-referenced tests. The practical tests, defined in the Airman Certification Standards and Practical Test Standards (ACS/PTS), are criterion referenced because the objective is for all successful applicants to meet the high standards of knowledge, skill, and safety required by the regulations. The purpose of the ACS/PTS is to delineate the standards by which FAA inspectors, designated pilot examiners (DPEs), and designated maintenance examiners (DMEs) conduct tests for ratings and certificates. The standards reflect the requirements of Title 14 of the Code of Federal Regulations (14 CFR) parts 61, 65, 91, and other FAA publications, including the Aeronautical Information Manual (AIM) and pertinent advisory circulars and handbooks.

The objectives in the ACS/PTS ensure the certification of pilots and maintenance technicians at a high level of performance and proficiency, consistent with safety. The ACS/PTS for aeronautical certificates and ratings include areas of operation and tasks that reflect the requirements of the FAA publications mentioned above. Areas of operation define phases of the practical test arranged in a logical sequence within each standard. They usually begin with preflight preparation and end with postflight procedures. Tasks are titles of knowledge areas, flight procedures, or maneuvers appropriate to an area of operation. Included are references to the applicable regulations or publications. Private pilot applicants are evaluated in all tasks of each area of operation. Flight instructor applicants are evaluated on one or more tasks in each area of operation. In addition, certain tasks are required to be covered and are identified by notes immediately following the area of operation titles.

Since evaluators may cover every task in the ACS/PTS on the practical test, the instructor should evaluate all of the tasks before recommending the maintenance technician or pilot applicant for the practical test. While this evaluation is not necessarily formal, it should follow the principles of criterion-referenced testing.

Critiques and Oral Assessments

Used in conjunction with either traditional or authentic assessment, the critique is an instructor-to-learner assessment. Critiques and oral assessments can be used with individual learners or in a classroom setting.

The word critique sometimes has a negative connotation, and the instructor needs to avoid using this method as an opportunity to be overly critical of learner performance. An effective critique considers good as well as bad performance, the individual parts, relationships of the individual parts, and the overall performance. A critique can and usually should be as varied in content as the performance being evaluated.

A critique may be oral, written, or both. It should come immediately after a learner’s performance, while the details of the performance are easy to recall. An instructor may critique any activity a learner performs or practices to improve skill, proficiency, and learning. A critique may be conducted privately or before the entire class. A critique presented before the entire class can be beneficial to every learner in the classroom, as well as to the learner who performed the exercise or assignment. In this case, however, the instructor should avoid embarrassing the learner in front of the class.

There are several useful ways to conduct a critique.

Instructor/Learner Critique

In an instructor/learner critique, the instructor leads a group discussion in which members of the class are invited to offer criticism of a performance. This method should be controlled carefully and directed with a clear purpose. It should be organized and not allowed to degenerate into a random free-for-all.

Learner-Led Critique

In a learner-led critique, the instructor asks a learner to lead the assessment. The instructor can specify the pattern of organization and the techniques, or can leave them to the discretion of the chosen leader. Because the participants are inexperienced in the lesson area, learner-led assessments may not be efficient, but they can generate learner interest and learning.

Small Group Critique

For the small group critique, the class is divided into small groups, each assigned a specific area to analyze. Each group presents its findings to the class. It is desirable for the instructor to furnish the criteria and guidelines. The combined reports from the groups can result in a comprehensive assessment.

Individual Learner Critique by Another Learner

The instructor may require another learner to present the entire assessment. A variation is for the instructor to ask a number of learners questions about the manner and quality of performance. Discussion of the performance and of the assessment can often allow the group to accept more ownership of the ideas expressed. As with all assessments incorporating learner participation, it is important that the instructor maintain firm control over the process.

Self-Critique

In a self-critique, a learner critiques their own performance. Like all other methods, a self-critique is controlled and supervised by the instructor.

Written Critique

A written critique has three advantages. First, the instructor can devote more time and thought to it than to an oral assessment in the classroom. Second, learners can keep written assessments and refer to them whenever they wish. Third, when the instructor asks all learners to write an assessment of a performance, the learner-performer has the permanent record of the suggestions, recommendations, and opinions of all the other learners. The disadvantage of a written assessment is that other members of the class do not benefit.

Whatever the type of critique, the instructor should resolve disagreements and correct erroneous impressions. The instructor also makes allowances for the learners’ relative inexperience. Normally, the instructor should reserve time at the end of the learner assessment to cover those areas that might have been omitted, not emphasized sufficiently, or considered worth repeating.

Oral Assessment

The most common means of assessment is direct or indirect oral questioning of learners by the instructor. Questions may be loosely classified as fact questions and higher-order thinking skills (HOTS) questions. The answer to a fact question is based on memory or recall. This type of question usually concerns who, what, when, and where. HOTS questions involve why or how and require the learner to combine knowledge of facts with an ability to analyze situations, solve problems, and arrive at conclusions.

Proper quizzing by the instructor can have a number of desirable results. For example, it:

- Reveals the effectiveness of the instructor’s training methods

- Checks learner retention of what has been learned

- Reviews material already presented to the learner

- Retains learner interest and stimulates thinking

- Emphasizes the important points of training

- Identifies points that need more emphasis

- Checks comprehension of what has been learned

- Promotes active learner participation, which is important to effective learning

Characteristics of Effective Questions

The instructor should devise and write pertinent questions in advance. One method is to place them in the lesson plan. Prepared questions serve only as a framework; as the lesson progresses, the instructor should supplement them with impromptu questions as appropriate. Objective questions have only one correct answer, while an open-ended HOTS question can be answered with a variety of possible solutions.

To be effective, questions must:

- Apply to the subject of instruction.

- Be brief and concise, but also clear and definite.

- Be adapted to the ability, experience, and stage of training of the learners.

- Center on only one idea (limited to who, what, when, where, how, or why, not a combination).

- Present a challenge to the learners.

Types of Questions to Avoid

Effective quizzing does not include yes/no questions such as “Do you understand?” or “Do you have any questions?” Instructors should also avoid the following types of questions:

⦁ Puzzle—“What is the first action you should take if a conventional gear airplane with a weak right brake is swerving left in a right crosswind during a full flap, power-on wheel landing?”

⦁ Oversize—“What do you do before beginning an engine overhaul?”

⦁ Toss-up—“In an emergency, should you squawk 7700 or pick a landing spot?”

⦁ Bewilderment—“In reading the altimeter—you know you set a sensitive altimeter for the nearest station pressure—if you take temperature into account, as when flying from a cold air mass through a warm front, what precaution should you take when in a mountainous area?”

⦁ Trick questions—these questions cause the learners to develop the feeling that they are engaged in a battle of wits with the instructor, and the whole significance of the subject of the instruction involved is lost. An example of a trick question would be one in which the response options are 1, 2, 3, and 4, but they are placed in the following form:

- 4

- 3

- 2

- 1

⦁ Irrelevant questions—diversions that introduce only unrelated facts and thoughts and slow the learner’s progress. Questions unrelated to the test topics are not helpful in evaluating the learner’s knowledge of the subject at hand. An example of an irrelevant question would be to ask a question about tire inflation during a test on the timing of magnetos.

Answering Learner Questions

Tips for responding effectively to learner questions, especially in a classroom setting:

1. Be sure that you clearly understand the question before attempting to answer.

2. Display interest in the learner’s question and frame an answer that is as direct and accurate as possible.

3. After responding, determine whether or not the learner is satisfied with the answer.

Sometimes it is unwise to introduce considerations more complicated or advanced than necessary to completely answer a learner’s question at the current point in training. In this case, the instructor should carefully explain to the learner that the question was good and pertinent, but that a detailed answer would, at this time, unnecessarily complicate the learning tasks. The instructor should invite the learner to reintroduce the question later at the appropriate point in training.

Occasionally, a learner asks a question that the instructor cannot answer. In such cases, the instructor should freely admit not knowing the answer, but should promise to get the answer or, if practicable, offer to help the learner look it up in available references.

Scenario-Based Training

Since flying has become more complex, training needs to focus on pilot decision-making and risk management, a concept the authors call Single-Pilot Resource Management (SRM). Since SRM training requires practicing the decision-making process in real time and in real situations, a new form of general aviation (GA) training that goes beyond the traditional task and maneuver-based training is recommended. The FAA/Industry Training Standards (FITS) program emphasizes combining traditional task and maneuver training with scenario-based training (SBT) to teach the advanced pilot judgment and risk management skills required in the SRM environment.

In many respects, scenario-based training is not a new concept. Experienced flight instructors have been using scenarios to teach cross-country operations, emergency procedures, and other flight skills for years. Scenario-based training puts the learner pilot into the normal cross-country environment much earlier than traditional flight training programs do. The goal is to begin training the pilot, through meaningful repetition, to gather information and make informed and timely decisions. We routinely refer to this process as “experience.” Scenario-based learning does not preclude traditional maneuver-based training. Rather, flight maneuvers are integrated into the flight scenarios and conducted as they would occur in the real world. Those maneuvers requiring repetition may still be taught in concentrated settings. However, once they are learned, they are integrated into realistic flight situations.

The flight instructor is crucial to the implementation of a scenario-based training program. In this capacity, an instructor serves in the learning environment as an advisor and guide for the learner. The duties, responsibilities, and authority of the flight instructor include the following:

⦁ Orient new learners to the scenario-based training system.

⦁ Help the learner become a confident planner and in-flight manager of each flight and a critical evaluator of their own performance.

⦁ Help the learner understand the knowledge requirements present in real world applications.

⦁ Diagnose learning difficulties and help the individual overcome them.

⦁ Evaluate learner progress and maintain appropriate records.

⦁ Provide continuous review of learning.

The flight instructor is the key to success, and different instructional techniques are required for successful SBT. Because the learning objective is for the learner to be more ready to exercise sound judgment and make good decisions, the flight instructor turns the responsibility for planning and execution of the flight over to the learner as soon as possible. The flight instructor continues to demonstrate and instruct skill maneuvers in the traditional manner; however, when the learner begins to make decisions, the flight instructor should revert to the role of mentor and/or learning facilitator.

Not every situation a learner faces has one right answer and one wrong answer. Instead, learners will encounter situations in training that may have several “good” outcomes as well as the potential for some “poor” ones. While the flight instructor should understand in advance which outcomes are positive or negative, the instructor allows the learner to make both good and poor decisions. This does not mean that the learner should be allowed to make an unsafe decision or commit an unsafe act. However, it does allow the learner to make decisions that fit their experience level and result in positive outcomes.

Chapter Summary

This chapter described the methods and techniques aviation instructors use to assess learner progress. A well-designed and timely assessment defines what is worth knowing, enhances motivation, and improves learning. Today’s learners want to know the criteria by which they are assessed, and they want practical and specific feedback. Aviation instructors familiar with different types of assessments know how and when to use them to promote a productive learning environment.